Fixing an IP with a DHCP reservation in libvirtd

If we want the IP to keep being assigned via DHCP:

Run everything as root or prefix the commands with sudo. Here default is the network name, and Debian12 is the name of the VM whose interface has MAC 52:54:00:a0:cc:55.
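If you don't know the VM's MAC address, `virsh domiflist Debian12` lists the VM's interfaces and their MACs. The sketch below pulls the MAC addresses out of that listing. `extract_macs` is a hypothetical helper, not part of libvirt, and the regex only assumes the usual colon-separated hex format:

```shell
#!/bin/sh
# Hypothetical helper: print every MAC address found on stdin, one per line.
extract_macs() {
  grep -Eo '([0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}'
}

# Only query libvirt when virsh is installed (run as root or with sudo).
if command -v virsh >/dev/null 2>&1; then
  virsh domiflist Debian12 | extract_macs
fi
```

For this VM it should print 52:54:00:a0:cc:55, the value used in the reservation.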

```
# virsh net-list
# virsh net-dumpxml default
# virsh net-edit default
```

Add the following line to the `<dhcp>` section of the `<ip>` block:

```xml
<host mac='52:54:00:a0:cc:55' name='Debian12' ip='192.168.122.199'/>
```

The block then looks like this:

```xml
<ip address='192.168.122.1' netmask='255.255.255.0'>
  <dhcp>
    <range start='192.168.122.100' end='192.168.122.254'/>
    <host mac='52:54:00:a0:cc:55' name='Debian12' ip='192.168.122.199'/>
  </dhcp>
</ip>
```

Restart the network:

```
# virsh net-destroy default
# virsh net-start default
```
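Another option is to skip `net-edit` and the restart and use `virsh net-update`. This command can add the reservation to the running network (`--live`) and to its persistent definition (`--config`) in one step. The sketch below uses the network, VM, MAC and IP from this post. The variable names are only there for readability. The guest will most likely only receive 192.168.122.199 the next time it requests or renews its DHCP lease.

```shell
#!/bin/sh
# Values from this post; adjust them for your own network and VM.
NET=default
MAC=52:54:00:a0:cc:55
NAME=Debian12
IP=192.168.122.199
HOST_XML="<host mac='$MAC' name='$NAME' ip='$IP'/>"

if command -v virsh >/dev/null 2>&1; then
  # Add the <host> entry to the <dhcp> section of the network:
  # --live updates the running network, --config the persistent definition.
  virsh net-update "$NET" add ip-dhcp-host "$HOST_XML" --live --config
  # Show the reservation as it now appears in the network XML.
  virsh net-dumpxml "$NET" | grep -F "$MAC"
else
  echo "virsh not found; would add: $HOST_XML" >&2
fi
```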

We're short on snow and water!

Enabling Cloudflare for aran.cat

Protection enabled.

The Three Kings…

… have already left something to read 🤗

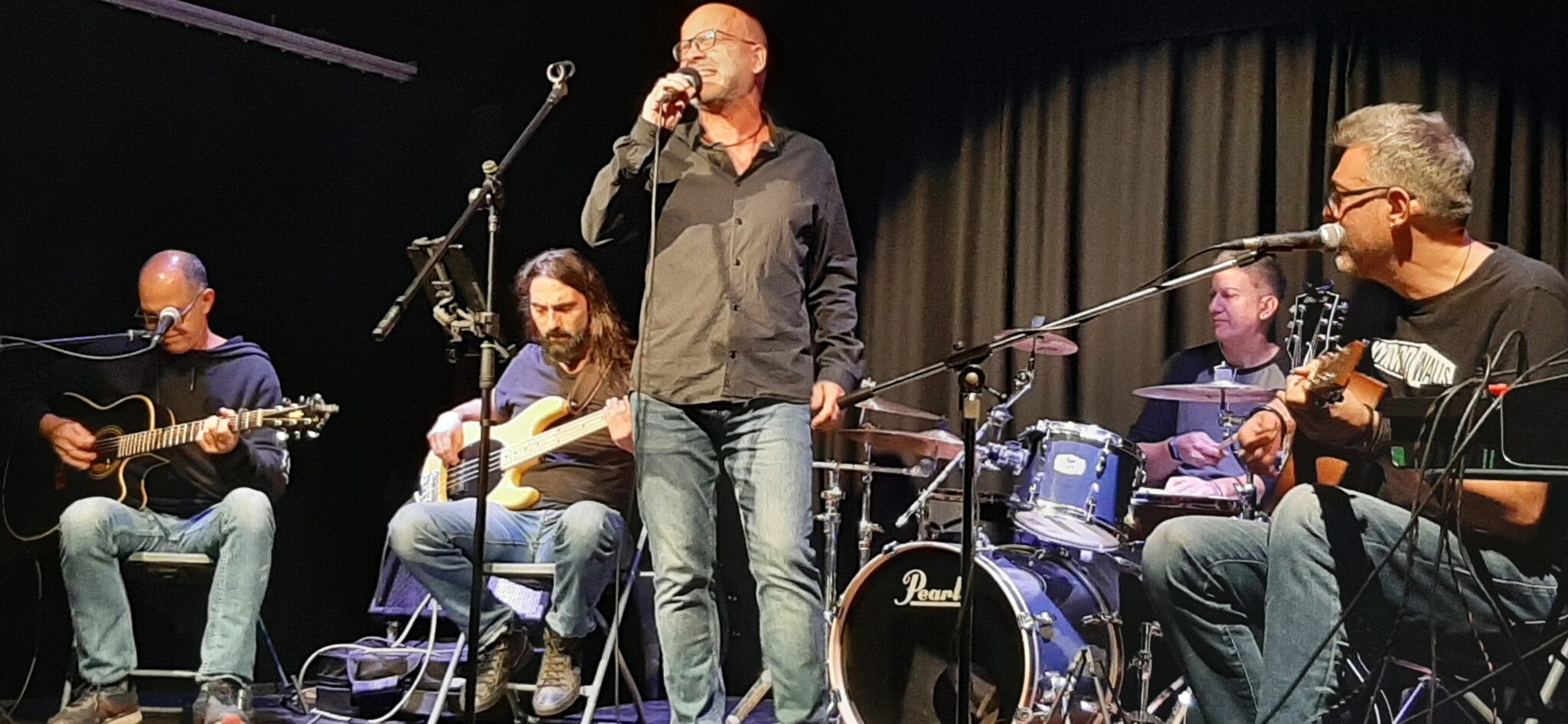

Minnesota Fats…

At l'Alvacot in Castellar…

Morning walk

So there you go… the baker closes on Tuesday!

New ISP, DDNS configured

The new host is rsm.myftp.org

Listening to … records!!!

… and then we'll move on to Extremoduro!